Understanding Metrics Percentiles

Introduction

When working with production systems, we usually track metrics like latency and throughput to understand system performance. But sometimes (and I mean most of the time), those metrics can give a false sense of good performance.

Well, not the metrics themselves but how we reason about them and how they get used and visualized.

In the amazing post "Notes on Distributed Systems for Young Bloods" on his blog somethingsimilar, Jeff Hodges makes a point about metrics and percentiles:

Metrics are the only way to get your job done. Exposing metrics is the only way to bridge the gap between what you believe your system does in production and what it actually does.

Use percentiles, not averages. Percentiles are more accurate and informative than averages. Using a mean assumes that the metric under evaluation follows a bell curve.

“Average latency” is a commonly reported metric, but I’ve never once seen a distributed system whose latency followed a bell curve.

This is a very valid point. Personally, I fell into this trap countless times early in my career, largely because of a poor understanding of the statistics involved and of how to interpret system performance data.

Imprecise Metric

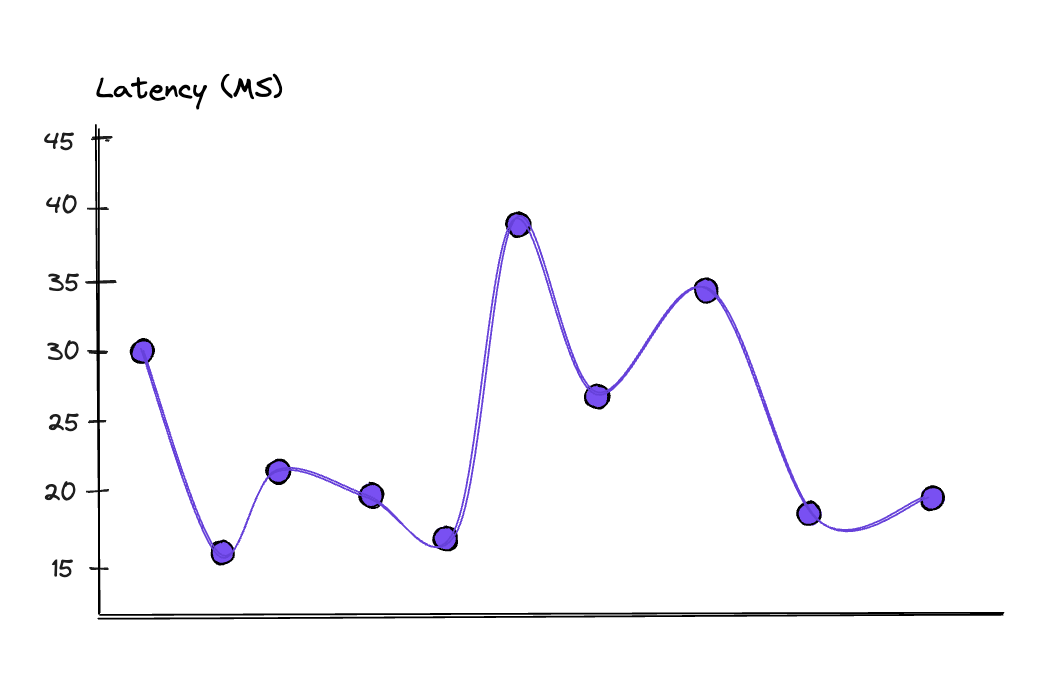

Taking a hands-on approach, let's assume we just deployed a new API that serves some calculations, and we are required to complete each calculation in under 25 ms. Suppose ten requests come back with the following latencies, in milliseconds:

[30, 15, 21, 18, 16, 40, 25, 35, 17, 18]

We can easily plot this as a line chart:

We can also calculate the average latency with the following formula:

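$$\text{avg} = \frac{\text{sum}(\text{latency})}{\text{count}(\text{latency})}$$

$$\text{avg} = \frac{15 + 18 + 17 + 18 + 16 + 21 + 25 + 30 + 35 + 40}{10}$$

$$\text{avg} = 23.5\,\text{ms}$$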

So, looking at the average for our API, it shows 23.5 ms, which suggests our API is meeting expectations and everything is fine.

But this is misleading. Once we take a deeper look at the data, we will realize we are not nearly as close to meeting expectations as the average suggests, and only percentiles can give us the full picture.
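To make the gap concrete, here is a minimal Python sketch that puts the average next to the requests that miss the target. The variable names are illustrative, and it assumes "under 25 ms" is read strictly, so a request that takes exactly 25 ms counts as a miss.

```python
from statistics import mean

latencies_ms = [30, 15, 21, 18, 16, 40, 25, 35, 17, 18]
target_ms = 25  # requirement from the text: finish in under 25 ms

average_ms = mean(latencies_ms)

# Assumption: "under 25 ms" is strict, so a 25 ms request is a miss.
misses = sorted(x for x in latencies_ms if x >= target_ms)
miss_share = len(misses) / len(latencies_ms)

print(f"average: {average_ms} ms")                # average: 23.5 ms
print(f"misses: {misses}")                        # misses: [25, 30, 35, 40]
print(f"share missing target: {miss_share:.0%}")  # share missing target: 40%
```

The average sits below the target even though four of the ten requests do not, which is exactly the blind spot an average creates.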

Using Percentiles to Understand Performance

Calculating Percentiles

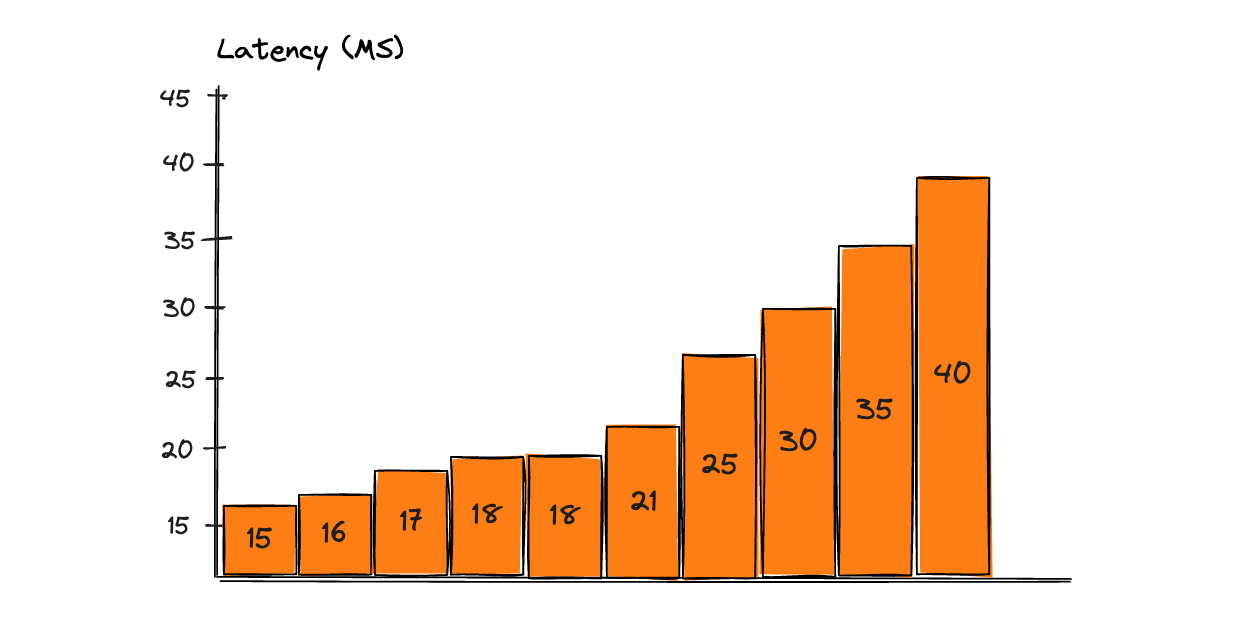

The concept of percentiles is straightforward. You sort your dataset in ascending order, decide which quantile you are interested in, and read off the value at the matching position (rank) in the sorted data.

This can be calculated with these formulas, where the rank is a 1-based position in the sorted dataset:

$$\text{rank} = \lfloor \text{Quantile} \times (\text{length}(\text{Latency}) + 1) \rfloor$$

$$P_{\text{Quantile}} = \text{Latency}_{\text{rank}}$$
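The two formulas translate almost directly into code. The sketch below is one possible Python rendering. The function name `percentile` is made up for illustration, and the clamping of the rank to the valid range is an added assumption, so that very low quantiles on small datasets do not produce rank 0.

```python
import math

def percentile(latencies, quantile):
    """Value at rank floor(quantile * (n + 1)) in the sorted data (1-based rank)."""
    if not latencies:
        raise ValueError("need at least one measurement")
    ordered = sorted(latencies)
    rank = math.floor(quantile * (len(ordered) + 1))
    # Assumption (not part of the formula above): keep the rank within 1..n.
    rank = max(1, min(rank, len(ordered)))
    return ordered[rank - 1]  # convert the 1-based rank to a 0-based index

latencies_ms = [30, 15, 21, 18, 16, 40, 25, 35, 17, 18]
print(percentile(latencies_ms, 0.50))  # 18
```

This rank-based approach always returns a value that was actually observed, which keeps the result easy to explain. Monitoring systems often use interpolation or histogram buckets instead, so their numbers may differ slightly.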

Usually in monitoring systems, we are interested in higher quantiles.

P50, P75, P90, and P99

Once sorted, our dataset looks like this:

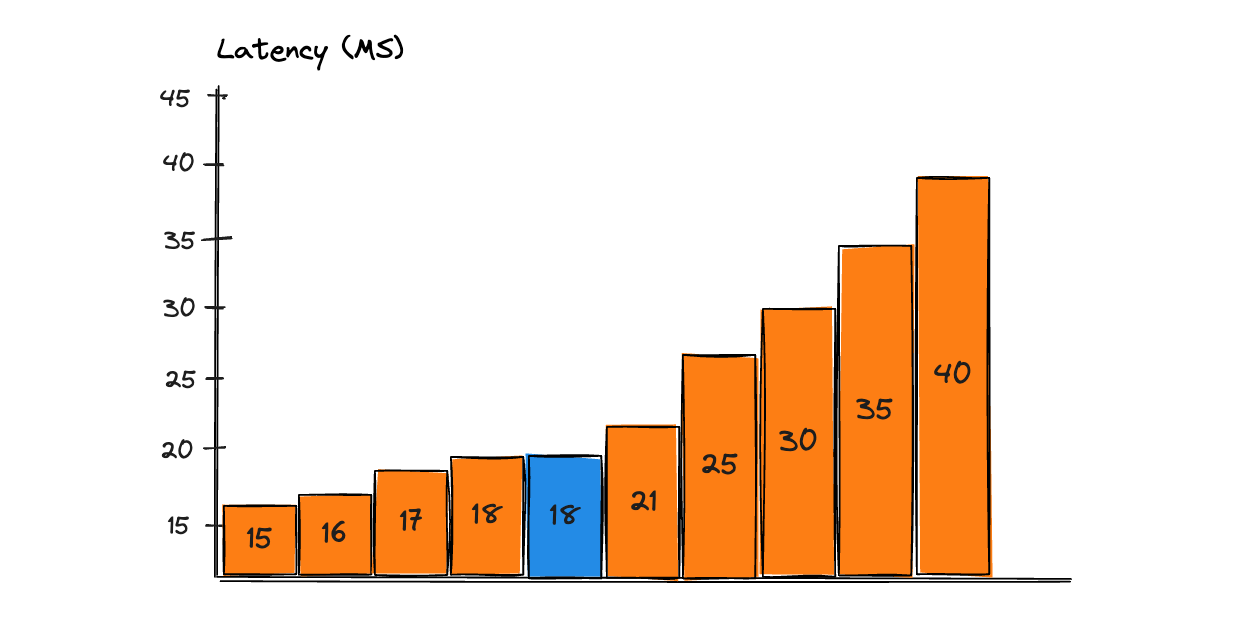

P50

The first percentile we'll calculate is the 50th percentile (the median), which is the "middle" value in the sorted dataset.

Applying the numbers on the previous formula:

$$\text{rank} = \lfloor 0.5 \times (10 + 1) \rfloor$$

$$\text{rank} = 5$$

$$P_{50} = \text{Latency}_{\text{rank}}$$

$$P_{50} = 18\,\text{ms}$$

So, the P50 shows us that at least 50% of our requests complete in 18 ms or less, well within our 25 ms latency threshold.

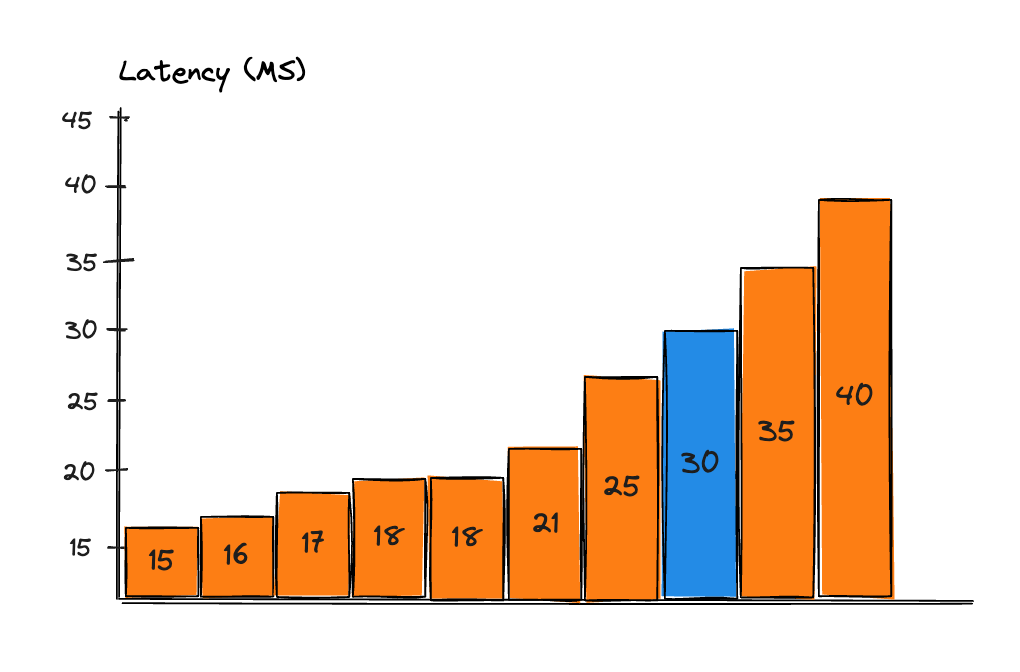

P75

Applying the same formula to calculate the 75th position:

$$\text{rank} = \lfloor 0.75 \times (10 + 1) \rfloor$$

$$\text{rank} = 8$$

$$P_{75} = 30\,\text{ms}$$

This tells us that at least 25% of our requests are exceeding our threshold.

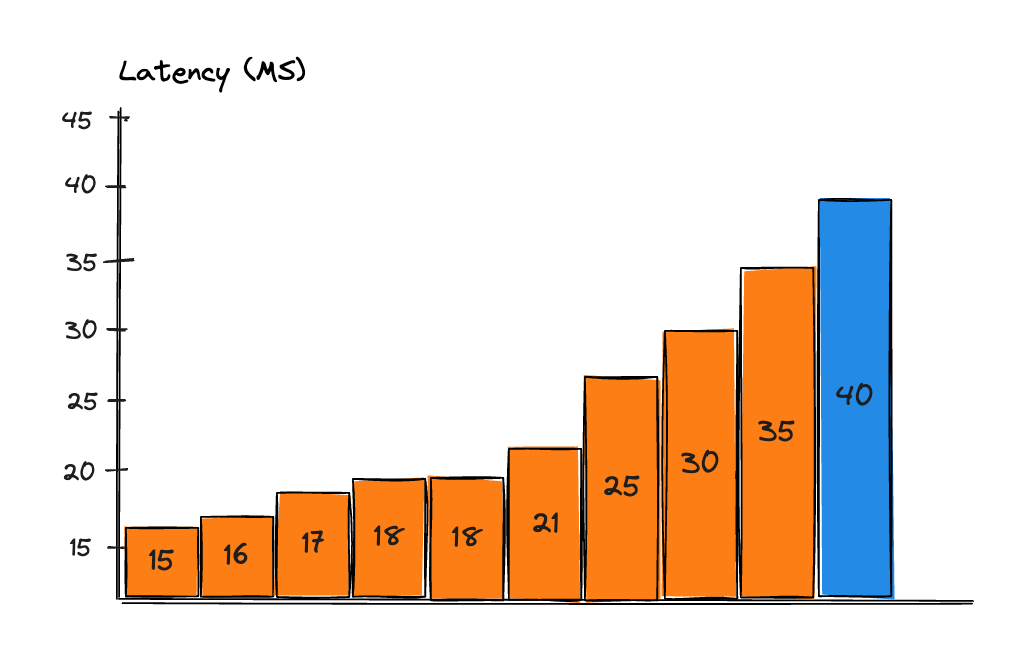

P90

By applying the same formula to calculate the P90, P99, and P99.9 quantiles, we gain more insights into our system's performance.

The same formula puts the P90 at rank ⌊0.9 × 11⌋ = 9, which is 35 ms, and the slowest 10% of our requests (a single request in this sample) take 40 ms, which is nearly double our average latency.
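On a sample this small, the exact P90 depends on which percentile definition is used. As a rough illustration, the sketch below compares the rank formula from this article with the two interpolation methods offered by `statistics.quantiles` in Python's standard library (Python 3.8+). The variable names are illustrative.

```python
import math
from statistics import quantiles

latencies_ms = [30, 15, 21, 18, 16, 40, 25, 35, 17, 18]
ordered = sorted(latencies_ms)

# Rank formula from this article: floor(q * (n + 1)), 1-based.
rank = math.floor(0.9 * (len(ordered) + 1))
p90_rank = ordered[rank - 1]

# Standard library: n=10 yields nine decile cut points; the last is the P90.
p90_exclusive = quantiles(latencies_ms, n=10, method="exclusive")[-1]
p90_inclusive = quantiles(latencies_ms, n=10, method="inclusive")[-1]

print(p90_rank)       # 35
print(p90_exclusive)  # 39.5
print(p90_inclusive)  # 35.5
```

All three answers are defensible. The differences shrink as the number of samples grows, so what matters is knowing which definition your monitoring tool applies before comparing numbers across tools.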

Full Picture

Putting together all the information we got gives us a comprehensive view of our system's performance:

50% of our requests are meeting our threshold.

At least 25% of our requests are exceeding our threshold.

About 25% of requests take 30 ms or longer.

10% of requests take nearly double our average latency.

With this information, we can reason about our system's performance and take concrete actions to improve it.
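The summary above can also be produced as a small report. The sketch below is one way to do it in Python, using the article's rank formula. The helper `percentile`, the `report` dictionary, and the strict reading of "under 25 ms" are assumptions made for illustration.

```python
import math

latencies_ms = [30, 15, 21, 18, 16, 40, 25, 35, 17, 18]
target_ms = 25  # requirement from the text: finish in under 25 ms

def percentile(values, quantile):
    """Rank-based percentile: value at floor(quantile * (n + 1)), clamped to 1..n."""
    ordered = sorted(values)
    rank = max(1, min(math.floor(quantile * (len(ordered) + 1)), len(ordered)))
    return ordered[rank - 1]

# Percentile points to report, expressed as whole percentages.
report = {f"P{p}": percentile(latencies_ms, p / 100) for p in (50, 75, 90, 99)}

for name, value in report.items():
    status = "ok" if value < target_ms else "over target"
    print(f"{name}: {value} ms ({status})")
# P50: 18 ms (ok)
# P75: 30 ms (over target)
# P90: 35 ms (over target)
# P99: 40 ms (over target)
```

Reading the lines top to bottom shows where the distribution crosses the target, something a single average cannot reveal.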

Takeaway

The main takeaway is to use percentiles as much as possible. Generally speaking, when you are monitoring a system, you shouldn't rely on a single angle. The performance data you extract from the system might seem alright (this is the easy bit, right?), but how you represent, interpret, and visualize the data is what truly matters.

If you can't understand the full picture of a system, you will fall into the same trap as many others: believing that your interpretation of how the system works matches how it actually works.